The left side refers to the probability of the output sequence, conditioned on the given input sequence. The right side contains the term $p(y_t \mid v, y_1, \ldots, y_{t-1})$, which is a vector of probabilities of all the words, conditioned on the vector representation and the outputs at the previous time steps.
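For reference, the equation being described is presumably the factorization from the Sutskever et al. paper. It is written out here under the assumption that $x_1, \ldots, x_T$ is the input sequence, $y_1, \ldots, y_{T'}$ is the output sequence, and $v$ is the final hidden state vector of the encoder:

$$
p(y_1, \ldots, y_{T'} \mid x_1, \ldots, x_T) = \prod_{t=1}^{T'} p(y_t \mid v, y_1, \ldots, y_{t-1})
$$

Each factor on the right is a softmax over the whole vocabulary, so the probability of an entire reply is the product of the per-word probabilities.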

Deep Learning Approach

Chatbots that use deep learning almost all use some variant of a sequence-to-sequence (Seq2Seq) model. In 2014, Ilya Sutskever, Oriol Vinyals, and Quoc Le published the seminal work in this field with a paper called “Sequence to Sequence Learning with Neural Networks.” This paper showed great results in machine translation specifically, but Seq2Seq models have grown to encompass a variety of NLP tasks.

A sequence-to-sequence model is composed of two main components: an encoder RNN and a decoder RNN (if you’re a little shaky on RNNs, check out my previous blog post for a refresher). From a high level, the encoder’s job is to encapsulate the information of the input text into a fixed representation. The decoder’s job is to take that representation and generate a variable-length text that best responds to it.

Let’s look at how this works at a more detailed level. As you may remember, an RNN contains a number of hidden state vectors, each of which represents information from the previous time steps. For example, the hidden state vector at the third time step will be a function of the first three words. By this logic, the final hidden state vector of the encoder RNN can be thought of as a pretty accurate representation of the whole input text.

The decoder is another RNN, which takes in the final hidden state vector of the encoder and uses it to predict the words of the output reply. The first cell’s job is to take in the vector representation v and decide which word in its vocabulary is the most appropriate for the output response. Mathematically speaking, this means that we compute probabilities for each of the words in the vocabulary, and choose the argmax of the values. The second cell will be a function of both the vector representation v and the output of the previous cell. The goal of the LSTM is to estimate the following conditional probability. Let’s deconstruct what that equation means.
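The decoding procedure described in this section (start from the vector representation v, compute a probability for every word in the vocabulary, pick the argmax, and feed that choice into the next cell) can be sketched in a few lines of plain Python. This is only an illustration under loud assumptions: a simple tanh RNN cell with random, untrained weights stands in for a trained LSTM, and the names `ToyDecoder`, `greedy_decode`, `VOCAB`, and `HIDDEN` are hypothetical, not taken from any library or from the original project.

```python
import math
import random

# Hypothetical toy vocabulary; index 0 doubles as the start and end token.
VOCAB = ["<eos>", "hi", "how", "are", "you"]
HIDDEN = 4  # assumed size of the vector representation v


def softmax(scores):
    """Turn raw scores into a probability distribution."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def random_matrix(rows, cols, rng):
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


class ToyDecoder:
    """Plain tanh RNN cell with random weights (a stand-in for an LSTM)."""

    def __init__(self, seed=0):
        rng = random.Random(seed)
        self.w_hh = random_matrix(HIDDEN, HIDDEN, rng)
        self.w_xh = random_matrix(HIDDEN, len(VOCAB), rng)
        self.w_hy = random_matrix(len(VOCAB), HIDDEN, rng)

    def step(self, hidden, prev_index):
        """One cell: new hidden state and p(y_t | v, y_1, ..., y_{t-1})."""
        one_hot = [1.0 if i == prev_index else 0.0 for i in range(len(VOCAB))]
        from_hidden = matvec(self.w_hh, hidden)
        from_input = matvec(self.w_xh, one_hot)
        new_hidden = [math.tanh(a + b) for a, b in zip(from_hidden, from_input)]
        probs = softmax(matvec(self.w_hy, new_hidden))
        return new_hidden, probs


def greedy_decode(decoder, v, max_len=10):
    """Pick the argmax word at every step, starting from the encoder vector v."""
    hidden = list(v)  # the decoder is initialised with the encoder's final state
    prev = 0          # start token
    words = []
    log_prob = 0.0    # log of the product of the per-step probabilities
    for _ in range(max_len):
        hidden, probs = decoder.step(hidden, prev)
        prev = max(range(len(probs)), key=probs.__getitem__)  # argmax
        log_prob += math.log(probs[prev])
        if VOCAB[prev] == "<eos>":
            break
        words.append(VOCAB[prev])
    return words, log_prob


if __name__ == "__main__":
    reply, log_p = greedy_decode(ToyDecoder(seed=0), [0.1, -0.2, 0.3, 0.0])
    print(reply, math.exp(log_p))
```

Because the weights are random, the reply is meaningless; the point is only the shape of the loop. Every step depends on v through the hidden state and on the previous output through `prev`, and the accumulated `log_prob` is the log of the product of the per-word probabilities.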
This “best” response should either (1) answer the sender’s question, (2) give the sender relevant information, (3) ask follow-up questions, or (4) continue the conversation in a realistic way. The chatbot needs to be able to understand the intentions of the sender’s message, determine what type of response message (a follow-up question, direct response, etc.) is required, and follow correct grammatical and lexical rules while forming the response. It’s safe to say that modern chatbots have trouble accomplishing all these tasks. For all the progress we have made in the field, we too often get chatbot experiences like this.

Chatbots are too often not able to understand our intentions, have trouble getting us the correct information, and are sometimes just exasperatingly difficult to deal with. As we’ll see in this post, deep learning is one of the most effective methods in tackling this tough task.

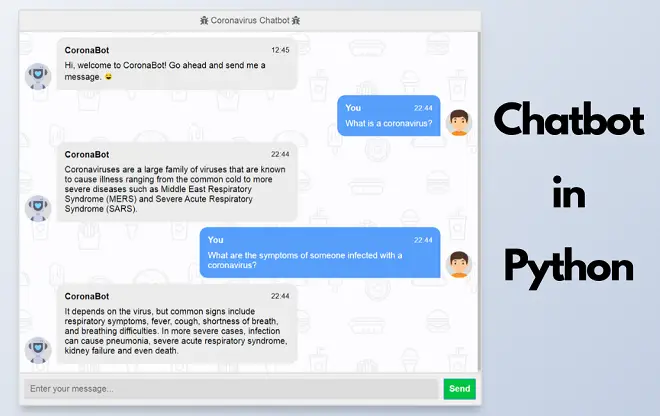

In this post, we’ll be looking more at chatbots that operate solely on the textual front. Facebook has been heavily investing in FB Messenger bots, which allow small businesses and organizations to create bots to help with customer support and frequently asked questions. Chatbots have been around for a decent amount of time (Siri was released in 2011), but only recently has deep learning become the go-to approach to the task of creating realistic and effective chatbot interaction. In this post, we’ll be looking at how we can use a deep learning model to train a chatbot on my past social media conversations in hope of getting the chatbot to respond to messages the way that I would.

Problem Space

From a high level, the job of a chatbot is to be able to determine the best response to any given message that it receives.

Chatbots are “computer programs which conduct conversations through auditory or textual methods.” Apple’s Siri, Microsoft’s Cortana, Google Assistant, and Amazon’s Alexa are four of the most popular conversational agents today. They can help you get directions, check the scores of sports games, call people in your address book, and can accidentally make you order a $170 dollhouse. These products all have auditory interfaces where the agent converses with you through audio messages.
